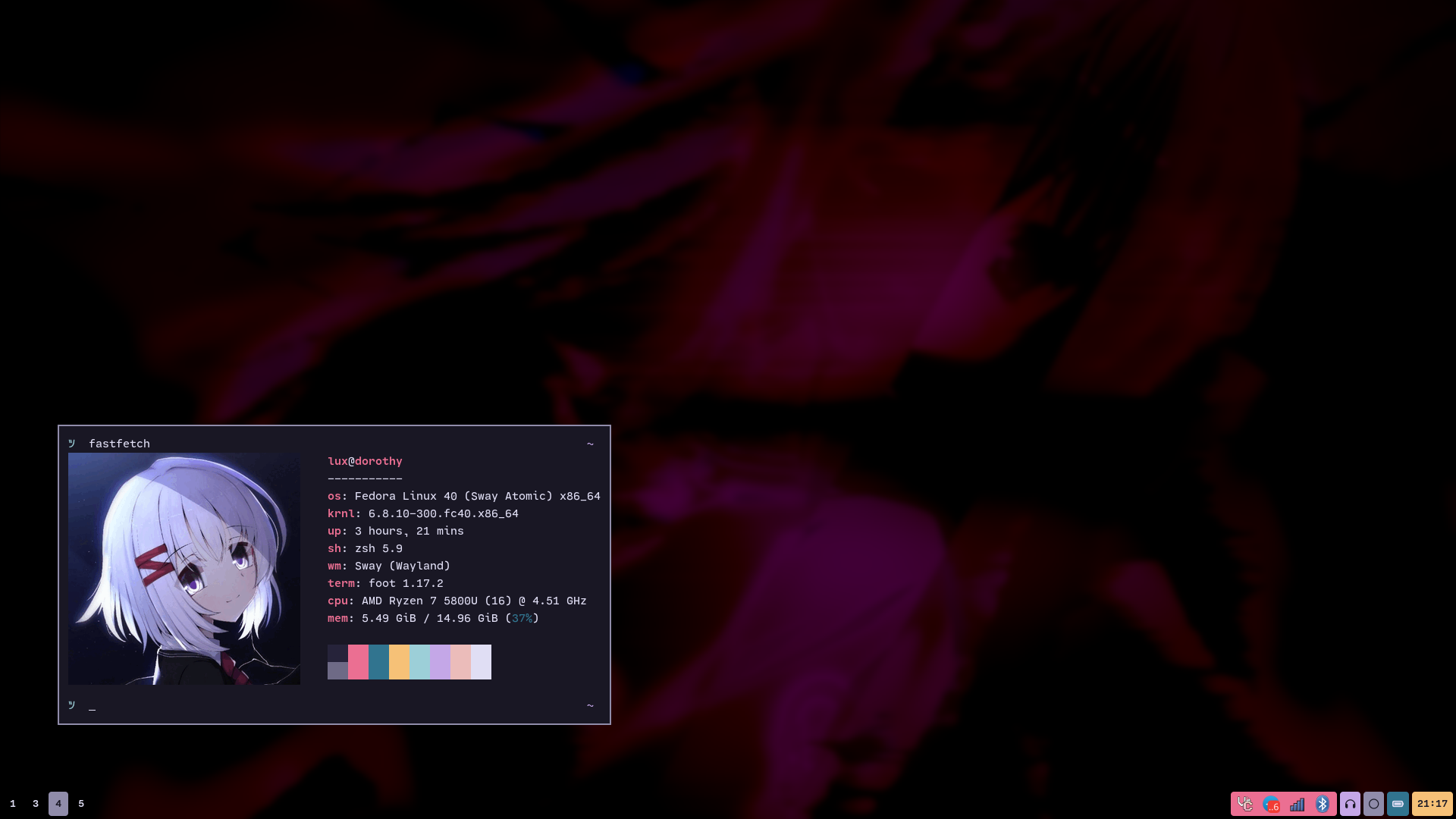

main: @lux@nixgoat.me

openpgp4fpr:580199C19A147B4D7C58B7935A400026ED2F57FD

Lux

repeated

Lux

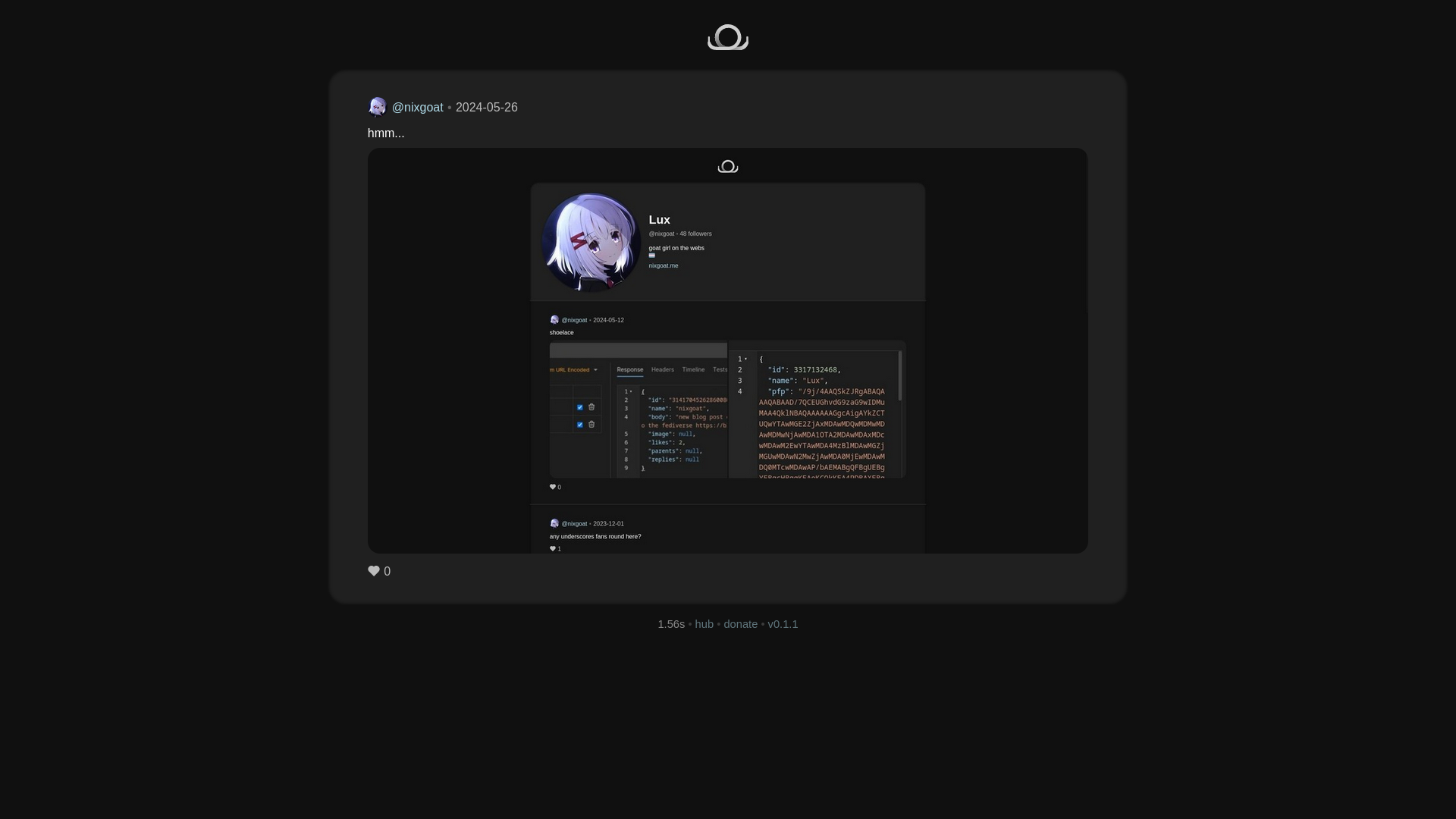

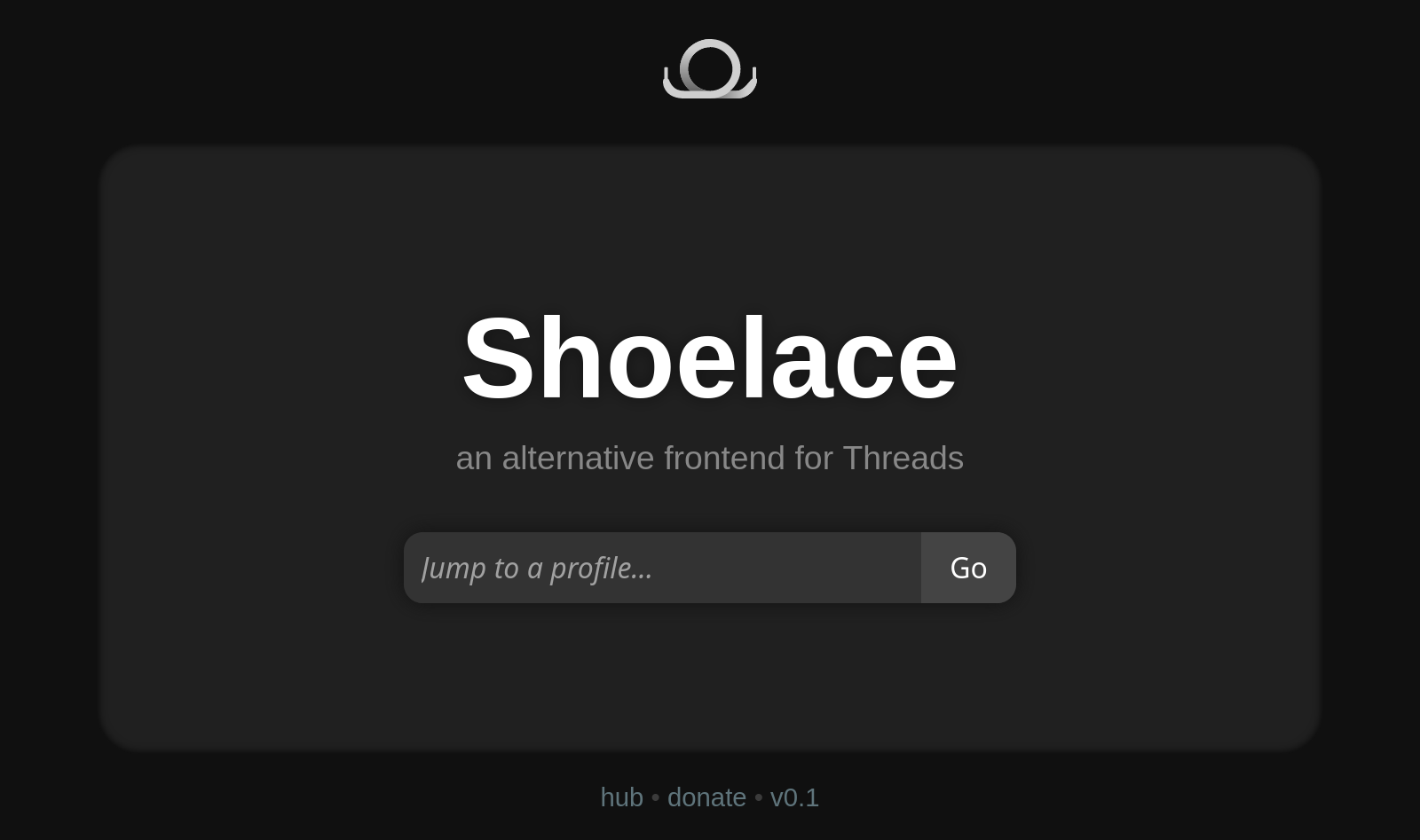

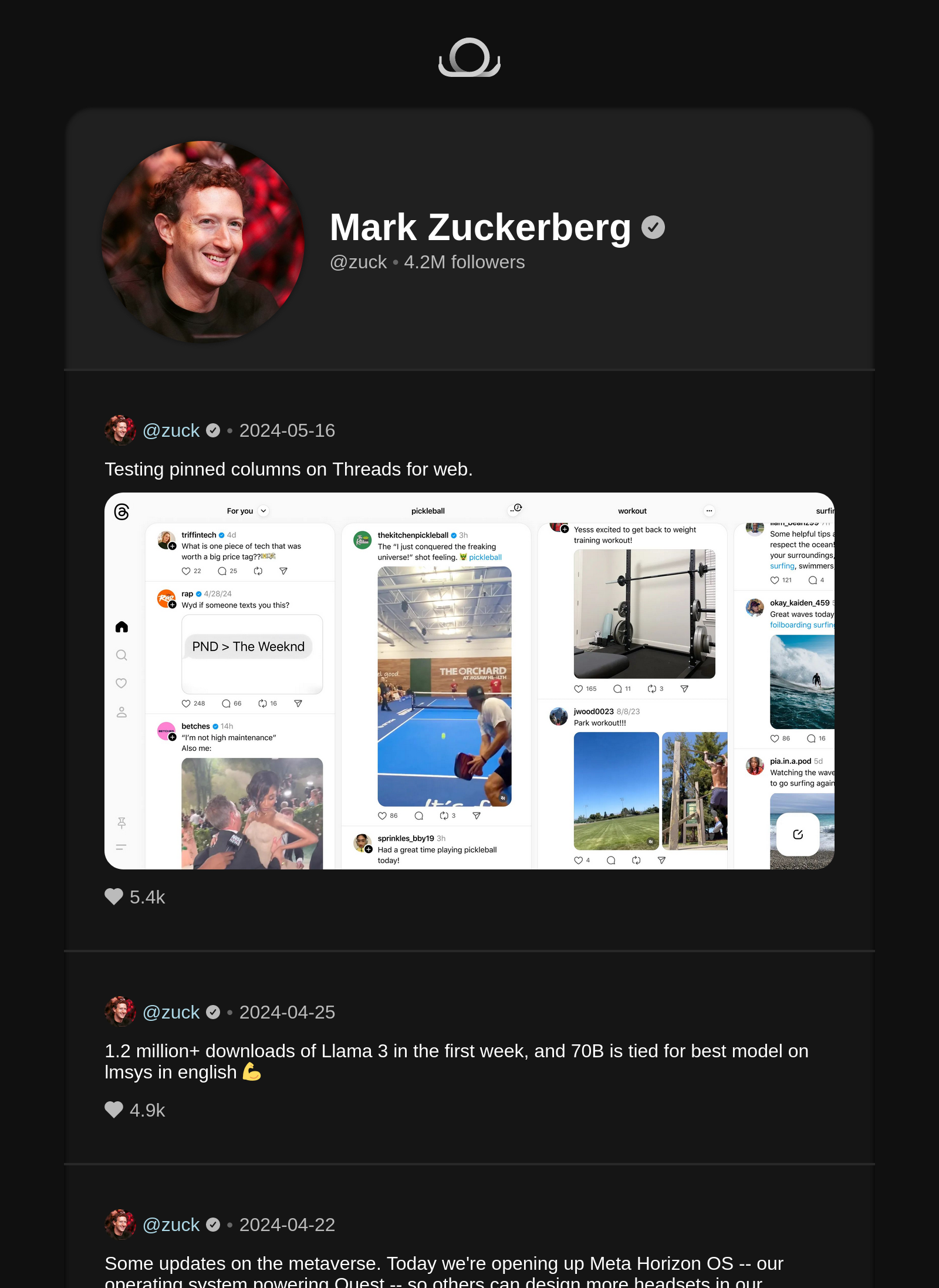

After two weeks of work, I'm announcing that the first release of my latest project is up: Shoelace! It's an alternative frontend for Instagram's Threads, written in Rust and powered by the spools library (made with the help of my girlfriend @nbsp <3).

Check it out at https://sr.ht/~nixgoat/shoelace

The official public instance (at least for now) is located at https://shoelace.mint.lgbt/

Lux

if you started following me between october 2023 and may 2024, i'd advise following me again so you get my posts

Lux

i'm also realizing returning to this checkpoint might not have been one of the best choices, and it has even had negative effects on some people. therefore, i'd like to ask the community again: do we reset, or stay with the current state of things? the instance would stay on the same domain, and might federate better, since some follows are not accounted for in this previous snapshot. if we reset, i'll give users a period of time to export and archive their data.

reset: 0%

stay: 0%

Lux

Edited 5 months ago

why, you may ask, would it federate better? because we can trigger user deletes, excluding certain usernames between the checkpoint and the corruption, and spin up a new instance in the same place with the same instance keys.

Lux

Edited 5 months ago

it's been a bit over a week since the coral castle data loss disaster, so imma give other server admins some recommendations on how not to fuck up like i did.

1. if you're upgrading your server, check the hardware you're using. this was caused by a single bad ram stick that i didn't test after install.

2. let postgres crash and stay down if it detects corruption. openrc automatically restarted the service, causing a loop where postgres would come back up and keep writing corrupted pages to storage.

3. consider pg_basebackup and wal archiving. back up the whole cluster at longer intervals (weekly, monthly), and let archived wal cover the smaller changes in between at shorter intervals (hourly).

4. check whether your backups actually work. two issues turned up when i checked one of my backups: first, i was backing up the wrong database (the coral castle neo database), and second, the backups were not encoded correctly (ASCII instead of UTF-8).

that's all everyone. keep your servers safe.
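The crash loop in item 2 came from the init system restarting postgres no matter why it died. Below is a minimal sketch of a restart policy that stops respawning after too many crashes in a short window, so a database that keeps dying on corruption stays down instead of writing more bad pages. `RestartPolicy` and its parameters are hypothetical names for illustration, not OpenRC settings; supervisors usually ship their own respawn limits, so check the ones yours offers.

```python
from collections import deque


class RestartPolicy:
    """Hypothetical crash-loop guard: allow at most `max_restarts` restarts
    within a sliding window of `window_seconds`, then refuse further restarts
    so the service stays down for a human to inspect."""

    def __init__(self, max_restarts: int, window_seconds: float) -> None:
        self.max_restarts = max_restarts
        self.window_seconds = window_seconds
        self._restarts = deque()  # timestamps of restarts still inside the window

    def should_restart(self, now: float) -> bool:
        # Forget restarts that have slid out of the window.
        while self._restarts and now - self._restarts[0] > self.window_seconds:
            self._restarts.popleft()
        if len(self._restarts) >= self.max_restarts:
            return False  # crash loop: leave the service stopped
        self._restarts.append(now)
        return True
```

With `RestartPolicy(max_restarts=3, window_seconds=60)`, crashes at 0, 5, 10 and 15 seconds get `True, True, True, False`: the fourth crash inside a minute leaves the service stopped.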
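With the schedule from item 3, a restore starts from the newest base backup taken before the failure and then replays the WAL archived after it. The sketch below is a simplified model: a hypothetical `plan_recovery` function that works on timestamps instead of real WAL segment files, showing which pieces a restore needs and how much data is lost (at most about one WAL interval). In postgres itself this is driven by settings such as `archive_mode`, `archive_command`, `restore_command` and `recovery_target_time`.

```python
from datetime import datetime, timedelta


def plan_recovery(base_backups: list[datetime],
                  wal_archives: list[datetime],
                  failure: datetime) -> tuple[datetime, list[datetime], datetime, timedelta]:
    """Hypothetical planner: pick the newest base backup taken before
    `failure`, the archived WAL to replay on top of it, the resulting
    recovery point, and the data lost between that point and the failure.

    Each entry in `wal_archives` is the time up to which that archive covers
    changes. Assumes the archives form an unbroken chain; real WAL replay
    stops at the first missing segment.
    """
    usable = [b for b in base_backups if b < failure]
    if not usable:
        raise ValueError("no base backup was taken before the failure")
    base = max(usable)
    # Only WAL written after the base backup (and up to the failure) is replayed.
    replay = sorted(w for w in wal_archives if base < w <= failure)
    recover_to = replay[-1] if replay else base
    return base, replay, recover_to, failure - recover_to
```

For example, with weekly base backups on January 1 and 8, WAL archived every hour, and a failure at 13:30 on January 9, it picks the January 8 backup, replays 37 hourly archives, and recovers to 13:00, losing 30 minutes of writes.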
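Both problems from item 4 (dumping the wrong database, and a dump written with the wrong encoding) can be caught by a quick sanity check on a plain-text `pg_dump` file. A minimal sketch, assuming plain SQL format (which writes a `SET client_encoding = '...';` line near the top) and a hypothetical `expected_marker` string, for instance the `CREATE TABLE` line of a table that only exists in the right database. It is no substitute for actually restoring the backup into a scratch database.

```python
def check_plain_dump(raw: bytes, expected_marker: str) -> list[str]:
    """Return the problems found in a plain-text pg_dump (empty list = looks OK).

    `expected_marker` is a hypothetical caller-chosen string that should only
    appear in a dump of the intended database."""
    try:
        text = raw.decode("utf-8")
    except UnicodeDecodeError as err:
        return [f"not valid UTF-8: {err}"]
    problems = []
    # pg_dump records the encoding it wrote the dump in.
    if "SET client_encoding = 'UTF8';" not in text:
        problems.append("dump was not written with client_encoding UTF8")
    if expected_marker not in text:
        problems.append(f"marker {expected_marker!r} missing: wrong database?")
    return problems
```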

Lux

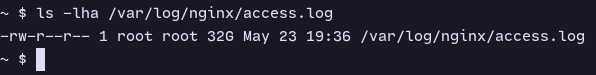

srry for the downtime everyone. the server ran out of disk space, so i had to clean up some logs to bring it back up. should be good now, and i'll be setting up logrotate so they don't pile up like this again
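logrotate's basic scheme is to move `app.log` to `app.log.1`, shift older copies up by one, and delete whatever falls past the configured count. A minimal sketch of that size-triggered scheme follows; `rotate_if_large` and its parameters are hypothetical, and it skips compression and date suffixes.

```python
import os


def rotate_if_large(path: str, max_bytes: int, keep: int) -> bool:
    """Hypothetical rotator: once `path` grows past `max_bytes`, keep at most
    `keep` old copies named path.1 (newest) .. path.<keep> (oldest).
    Returns True if a rotation happened."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    if not os.path.exists(path) or os.path.getsize(path) <= max_bytes:
        return False
    # Drop the oldest copy so the shift below never exceeds `keep` files.
    oldest = f"{path}.{keep}"
    if os.path.exists(oldest):
        os.remove(oldest)
    # Shift path.N -> path.(N+1), starting from the highest number.
    for i in range(keep - 1, 0, -1):
        src = f"{path}.{i}"
        if os.path.exists(src):
            os.replace(src, f"{path}.{i + 1}")
    # Move the live log aside and start a fresh, empty one.
    os.replace(path, f"{path}.1")
    open(path, "w").close()
    return True
```

One caveat the sketch ignores: a running service keeps writing to its already-open file handle after the rename, which is why logrotate offers `postrotate` scripts (to make the service reopen its logs) and the `copytruncate` option.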

Lux

i am so close to getting a bluetooth jammer and killing off my neighbor's music i swear to god i can't take it anymoreee